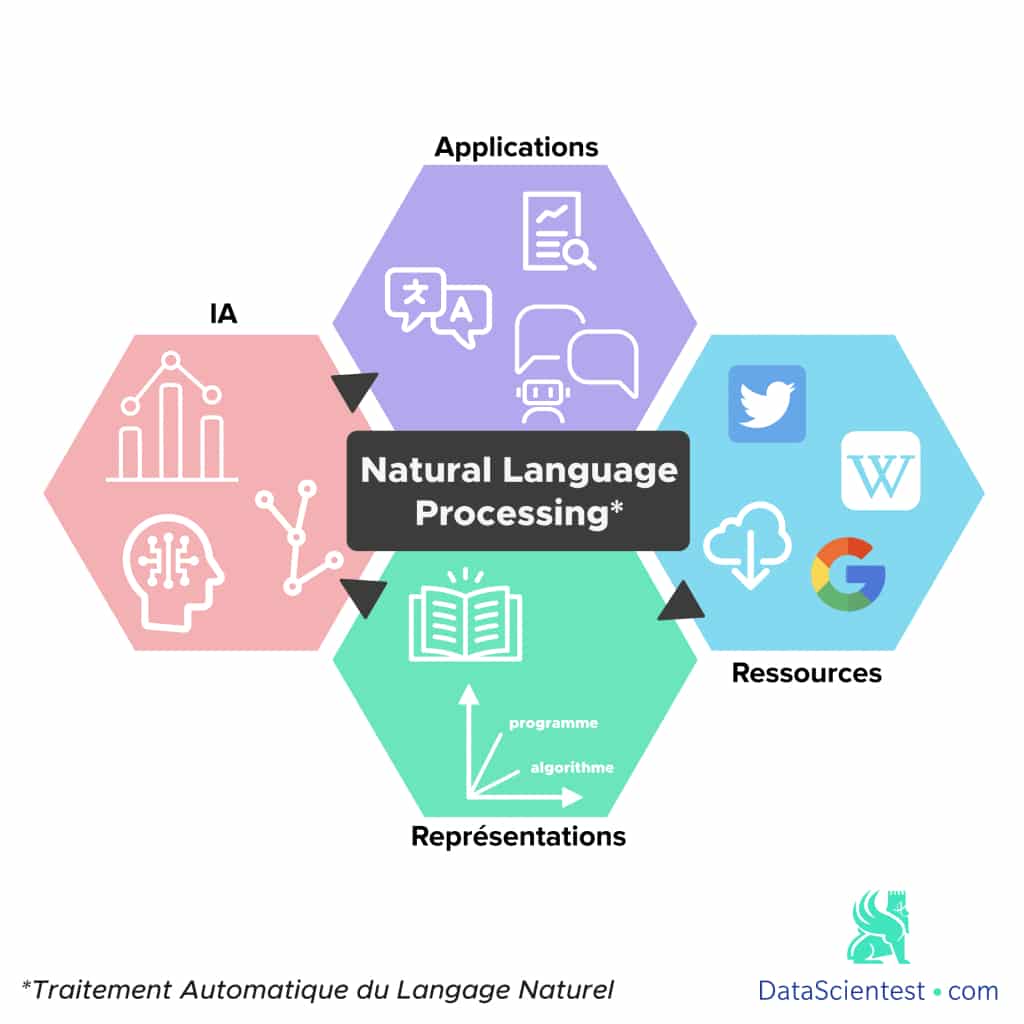

NLP, or Natural Language Processing, is a discipline that focuses on the understanding, manipulation and generation of natural language by machines. NLP therefore sits at the interface between computer science and linguistics: it is about giving machines the ability to interact directly with humans.

NLP is a fairly generic term that covers a very wide range of applications. Here are the most popular applications:

The development of machine translation algorithms has truly revolutionized the way texts are translated today. Applications, such as Google Translate, are able to translate entire texts without any human intervention.

Because natural language is inherently ambiguous and variable, these applications do not rely on word-for-word replacement. Instead, they require genuine analysis and modeling of the text, as in Statistical Machine Translation.

Also known as “Opinion Mining”, sentiment analysis involves identifying subjective information in a text to extract the author’s opinion.

For example, when a brand launches a new product, it can use the comments collected on social networks to identify the overall positive or negative sentiment shared by customers.
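To make the idea concrete, here is a minimal lexicon-based sketch of how comments could be aggregated into an overall positive or negative label. The word lists and the scoring rule (count of positive words minus count of negative words) are hypothetical simplifications chosen for illustration; production systems typically rely on much larger lexicons or trained models.

```python
import re

# Hypothetical mini-lexicons for illustration only.
POSITIVE = {"love", "great", "excellent", "happy", "recommend"}
NEGATIVE = {"hate", "broken", "terrible", "disappointed", "refund"}


def polarity(comment: str) -> int:
    """Return (#positive words - #negative words) for one comment."""
    words = re.findall(r"[a-z']+", comment.lower())  # lowercase, strip punctuation
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def overall_sentiment(comments: list[str]) -> str:
    """Aggregate per-comment scores into one overall label."""
    total = sum(polarity(c) for c in comments)
    if total > 0:
        return "positive"
    if total < 0:
        return "negative"
    return "neutral"


comments = [
    "I love this phone, great battery",
    "Screen arrived broken, very disappointed",
    "Would recommend to a friend",
]
print(overall_sentiment(comments))  # positive
```

Summing raw word counts ignores negation ("not great") and sarcasm, which is exactly the kind of difficulty discussed later in this article.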

In general, sentiment analysis is a way to measure the level of customer satisfaction with the products or services provided by a company or organization. It can even be much more effective than traditional methods such as surveys.

Indeed, while many people are reluctant to spend time answering long questionnaires, a growing share of consumers now frequently share their opinions on social networks. Searching for negative texts and identifying the main complaints therefore makes it possible to improve products, adapt advertising and reduce customer dissatisfaction.

Marketers also use NLP to find people who are likely to make a purchase.

They rely on the behavior of Internet users on websites, social networks and search engine queries. This type of analysis allows Google to generate a significant profit by offering the right advertisement to the right people. Each time a visitor clicks on an ad, the advertiser pays up to 50 dollars!

More generally, NLP methods can be used to build a rich and comprehensive picture of a company’s existing market, customers, issues, competition, and growth potential for new products and services.

Raw data sources for this analysis include sales logs, surveys and social media…

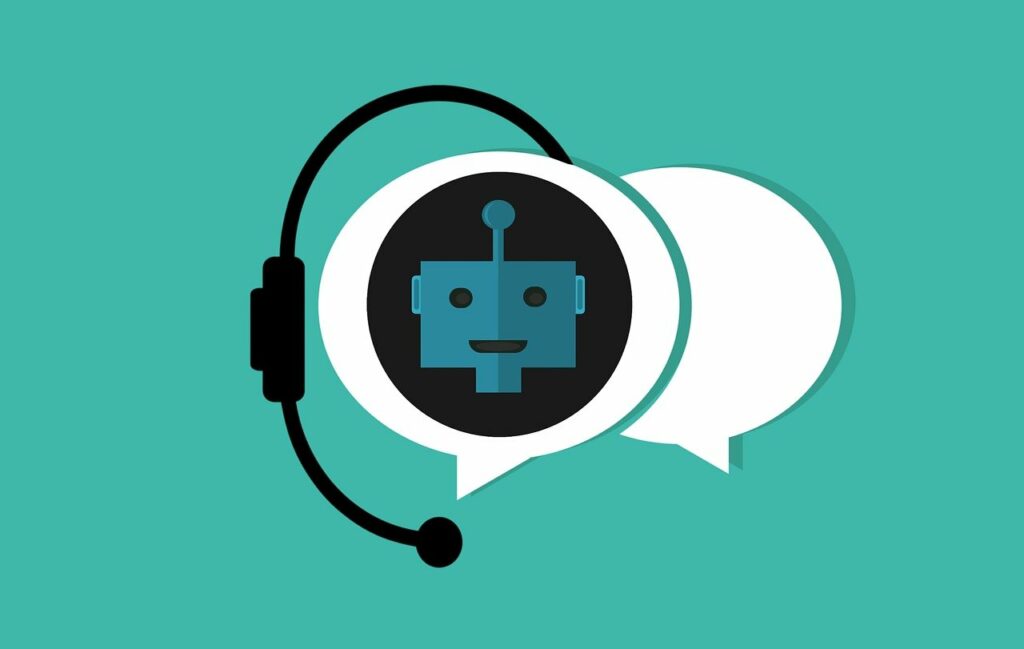

NLP methods are at the heart of how today’s chatbots work. While these systems are not perfect, they can now easily handle standard tasks such as informing customers about products or services and answering their questions. They are used across multiple channels, including websites, applications and messaging platforms. The opening of the Facebook Messenger platform to chatbots in 2016 contributed to their development.

Broadly speaking, we can distinguish two aspects that are essential to any NLP problem: transforming the raw text into usable data, and applying Machine Learning models to that data.

In what follows, we discuss these two aspects, briefly describing the main methods and highlighting the main challenges, using a classic example: spam detection.

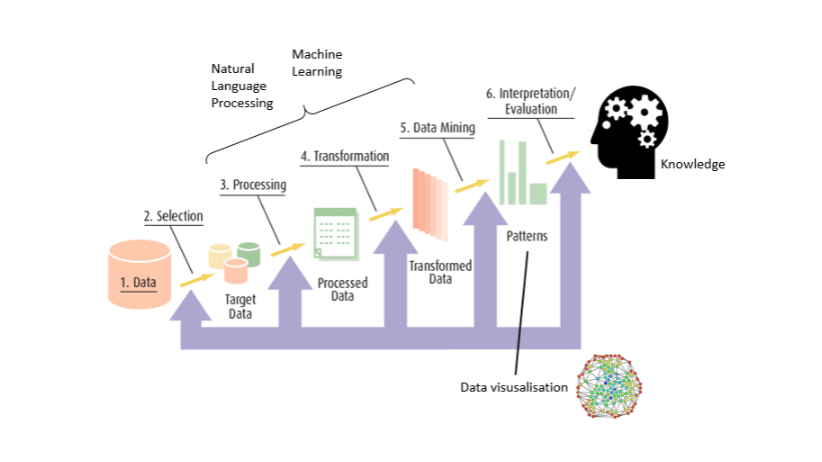

Let’s assume that you want to be able to determine whether an e-mail is spam or not, based on its content alone. To do this, it is essential to transform the raw data (the text of the email) into usable data.
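As an illustration, here is a minimal preprocessing sketch that lowercases an e-mail, removes URLs and punctuation, tokenizes it and drops stop words. The exact steps and the tiny `STOP_WORDS` set are assumptions made for this example; libraries such as NLTK or spaCy provide more complete tokenizers and stop-word lists.

```python
import re

# Hypothetical, deliberately tiny stop-word list.
STOP_WORDS = {"a", "an", "the", "to", "of", "and", "or", "is", "for", "on", "you", "your"}


def preprocess(email_text: str) -> list[str]:
    """Turn raw e-mail text into a list of normalized tokens."""
    text = email_text.lower()                          # normalize case
    text = re.sub(r"https?://\S+", " ", text)          # drop URLs
    tokens = re.findall(r"[a-z0-9]+", text)            # keep alphanumeric tokens only
    return [t for t in tokens if t not in STOP_WORDS]  # remove stop words


print(preprocess("WIN a FREE iPhone!!! Click https://example.com/offer now"))
# ['win', 'free', 'iphone', 'click', 'now']
```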

The main steps include:

In order to apply Machine Learning methods to natural language problems, it is necessary to transform textual data into numerical data.

There are several approaches, but the main one remains Term Frequency. This method consists in counting, for each text, the number of occurrences of each token present in the corpus. Each text is then represented by a vector of occurrence counts. This is generally referred to as a Bag-of-Words.
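A Bag-of-Words representation can be sketched in a few lines of Python, assuming the texts have already been tokenized. The vocabulary here is simply the sorted set of all tokens in the corpus, which is one convenient ordering among many.

```python
from collections import Counter


def build_vocabulary(corpus: list[list[str]]) -> list[str]:
    """Sorted list of every distinct token in the tokenized corpus."""
    return sorted({token for doc in corpus for token in doc})


def bag_of_words(doc: list[str], vocabulary: list[str]) -> list[int]:
    """Occurrence count of each vocabulary token in one document."""
    counts = Counter(doc)
    return [counts[token] for token in vocabulary]  # Counter returns 0 for missing tokens


corpus = [
    ["free", "offer", "free", "money"],
    ["meeting", "tomorrow", "offer"],
]
vocab = build_vocabulary(corpus)
vectors = [bag_of_words(doc, vocab) for doc in corpus]
print(vocab)    # ['free', 'meeting', 'money', 'offer', 'tomorrow']
print(vectors)  # [[2, 0, 1, 1, 0], [0, 1, 0, 1, 1]]
```

Each vector has one entry per vocabulary token, so its length grows with the vocabulary of the corpus.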

Nevertheless, this approach has a major drawback: some words (such as “the” or “and”) are by nature used far more often than others, which can bias the model and lead to erroneous results.

Term Frequency-Inverse Document Frequency (TF-IDF): this method also counts the occurrences of each token in each text, but then multiplies each count by a factor that decreases with the number of documents in the corpus that contain the token. Tokens that appear in almost every document therefore receive a low weight.

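For a term x present in a document y, its weight can be defined by the following relation:

$$w_{x,y} = \mathrm{tf}_{x,y} \times \log\left(\frac{N}{\mathrm{df}_x}\right)$$

where $\mathrm{tf}_{x,y}$ is the frequency of x in y, $\mathrm{df}_x$ is the number of documents containing x, and $N$ is the total number of documents.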

This approach therefore represents each text by a vector of weights rather than a vector of raw occurrence counts.
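The sketch below computes TF-IDF weights on a toy tokenized corpus, assuming the standard weighting $w_{x,y} = \mathrm{tf}_{x,y} \times \log(N / \mathrm{df}_x)$ with raw counts for $\mathrm{tf}$ and the natural logarithm. Libraries often use smoothed or normalized variants, so their numbers will differ.

```python
import math
from collections import Counter


def tf_idf(corpus: list[list[str]]) -> list[dict[str, float]]:
    """TF-IDF weight of every token in every document of a tokenized corpus."""
    n_docs = len(corpus)  # N: total number of documents
    # df_x: number of documents that contain token x at least once
    df = Counter(token for doc in corpus for token in set(doc))
    weights = []
    for doc in corpus:
        tf = Counter(doc)  # tf_{x,y}: raw count of x in document y
        weights.append({x: tf[x] * math.log(n_docs / df[x]) for x in tf})
    return weights


corpus = [
    ["free", "offer", "free", "money"],
    ["meeting", "tomorrow", "offer"],
]
weights = tf_idf(corpus)
for w in weights:
    print({k: round(v, 3) for k, v in w.items()})
```

Because “offer” appears in both documents, $\log(2/2) = 0$ and its weight vanishes, while “free”, which appears twice in a single document, gets the highest weight.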

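The efficiency of these methods depends on the use case. However, they have two main limitations: the size of the vectors grows with the vocabulary of the corpus, and the context in which words appear is not taken into account.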

Another approach remedies these problems: Word Embedding. It consists in building fixed-size vectors that take into account the context in which words appear.

Thus, two words that appear in similar contexts will have closer vectors (in terms of vector distance). This allows us to capture semantic, syntactic and thematic similarities between words.
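Closeness between embeddings is commonly measured with cosine similarity; the text does not specify a metric, so this choice is an assumption. In the sketch below, the three-dimensional vectors in `EMBEDDINGS` are invented for illustration. Real embeddings (for example word2vec or GloVe) are learned from large corpora and usually have hundreds of dimensions.

```python
import math

# Hypothetical toy embeddings, NOT learned from data.
EMBEDDINGS = {
    "offer":     [0.9, 0.1, 0.0],
    "promotion": [0.8, 0.2, 0.1],
    "meeting":   [0.1, 0.9, 0.3],
}


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between u and v (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


print(round(cosine_similarity(EMBEDDINGS["offer"], EMBEDDINGS["promotion"]), 3))
print(round(cosine_similarity(EMBEDDINGS["offer"], EMBEDDINGS["meeting"]), 3))
```

With these toy values, “offer” is much closer to “promotion” than to “meeting”, which is the kind of relationship a trained embedding is expected to capture.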

A more detailed description of this method will be given in the next section.

Overall, we can distinguish 3 main NLP approaches: rule-based methods, classical Machine Learning models and Deep Learning models.

With a rule-based method, spam detection could consist of flagging as spam the e-mails that contain buzzwords such as “promotion”, “limited offer”, etc.
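A rule of this kind takes only a few lines. In this minimal sketch, the list `BUZZWORDS` is hypothetical apart from the two examples quoted in the text, and matching is a plain case-insensitive substring search.

```python
# Hypothetical buzzword list; only "promotion" and "limited offer" come from the text.
BUZZWORDS = ("promotion", "limited offer", "free money", "click here")


def is_spam(email_text: str) -> bool:
    """Flag an e-mail as spam if it contains any buzzword."""
    text = email_text.lower()
    return any(buzzword in text for buzzword in BUZZWORDS)


print(is_spam("Limited offer: 50% off today only!"))  # True
print(is_spam("Agenda for tomorrow's meeting"))        # False
print(is_spam("Congratulations on your promotion!"))  # True (a false positive)
```

The last call shows how brittle such rules are: a colleague congratulating you on a job promotion is flagged as spam, because the rule cannot use context.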

However, these simple methods can be quickly overwhelmed by the complexity of natural language and prove to be inefficient.

Deep Learning models generalize even better than classical Machine Learning approaches because they require a less sophisticated text preprocessing phase: neural layers can be seen as automatic feature extractors.

This makes it possible to build end-to-end models with little data preprocessing. Beyond feature engineering, Deep Learning algorithms generally have greater learning capacity than classical Machine Learning models, which yields better scores on complex NLP tasks such as translation.
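Between hand-written rules and Deep Learning sit the classical Machine Learning models mentioned earlier. The text does not name a specific one, so as an illustration this sketch uses a multinomial Naive Bayes classifier with add-one (Laplace) smoothing, a common baseline for spam filtering, trained on a tiny hypothetical corpus of tokenized e-mails. The class name `NaiveBayesSpamFilter` is invented for this example.

```python
import math
from collections import Counter


class NaiveBayesSpamFilter:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs: list[list[str]], labels: list[str]) -> "NaiveBayesSpamFilter":
        self.classes = sorted(set(labels))
        self.vocab = {t for doc in docs for t in doc}
        self.log_prior, self.token_counts, self.total_tokens = {}, {}, {}
        for c in self.classes:
            class_docs = [d for d, y in zip(docs, labels) if y == c]
            # log P(class): share of training documents with this label
            self.log_prior[c] = math.log(len(class_docs) / len(docs))
            counts = Counter(t for d in class_docs for t in d)
            self.token_counts[c] = counts
            self.total_tokens[c] = sum(counts.values())
        return self

    def predict(self, doc: list[str]) -> str:
        v = len(self.vocab)

        def log_score(c: str) -> float:
            s = self.log_prior[c]
            for t in doc:
                if t in self.vocab:  # tokens never seen in training are ignored
                    # smoothed log P(token | class)
                    s += math.log((self.token_counts[c][t] + 1) / (self.total_tokens[c] + v))
            return s

        return max(self.classes, key=log_score)


train_docs = [
    ["free", "offer", "money"],
    ["limited", "offer", "click"],
    ["meeting", "tomorrow", "agenda"],
    ["project", "report", "tomorrow"],
]
train_labels = ["spam", "spam", "ham", "ham"]
model = NaiveBayesSpamFilter().fit(train_docs, train_labels)
print(model.predict(["free", "offer"]))       # spam
print(model.predict(["report", "meeting"]))   # ham
```

Unlike the buzzword rule, the model learns which words are indicative of spam from labeled examples, but it still relies on the hand-built preprocessing and Bag-of-Words features described above.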

The rules that govern the transformation of natural language text into information are not easy for computers to understand. Doing so requires understanding both the words and how the concepts are related in order to deliver the intended message.

In natural language, a single word can have several meanings depending on the context, which leads to lexical, syntactic and semantic ambiguity. NLP offers several methods to address this, such as context evaluation. However, understanding the semantic meaning of words in a sentence remains a work in progress.

Another key phenomenon in natural language is that we can express the same idea with different terms that also depend on the specific context.

For example, the terms “big” and “wide” may be synonymous when describing an object or a building, but they are not interchangeable in every context: in “my big brother”, “big” means older.

Coreference resolution involves finding all expressions that refer to the same entity. This is an important step for many high-level NLP tasks that involve whole-text understanding, such as document summarization, question answering and information extraction. This problem has seen a revival with the introduction of state-of-the-art Deep Learning techniques.

Depending on the author’s personality, intentions and emotions, the same idea can be expressed in different ways. Some authors do not hesitate to use irony or sarcasm, and thus convey a meaning opposite to the literal one.

So while humans can easily master a language, the ambiguity and imprecision of natural languages are what make NLP difficult for machines.

This content has been reposted from DataScientest.com for informational purposes only.